NATURAL LANGUAGE PROCESSING (NLP) Basics with spaCy (Part 1)

In this article, you’ll learn the basics of Natural Language Processing (NLP), such as tokenization, lemmatization, stop words, part-of-speech (POS) tagging, and more, using the spaCy library.

Text data, as we all know, can be a rich source of information if it can be efficiently mined and processed.

According to statistics from the link below 👇

In the year 2019, 26 billion texts were sent each day by 27 million people in the US.

You wouldn’t want to start a journey without knowing your destination, would you? 😎 Let’s talk a bit about what NLP is and what it is used for before we move on to the spaCy library.

What is NLP?

NLP is an area of computer science and artificial intelligence concerned with the interactions between computers and human (natural) languages, in particular how to program computers to process and analyze large amounts of natural language data.

NLP can simply be said to be the ability of a computer to read and understand human languages.

Uses of NLP

- Classifying emails as legitimate or illegitimate

- Sentiment Analysis

- Movie reviews

- Understanding text commands, e.g. ‘hey google, play this song’

Now that we know what NLP is and what it is used for, let’s dive into the spaCy library.

Why spaCy?

spaCy is an open-source NLP library for Python, developed by Explosion AI and first released in 2015. It was designed to handle NLP tasks efficiently. It is faster than many other NLP libraries and much easier to use; however, it doesn’t include pre-built models for sentiment analysis.

The figure below shows a speed comparison of spaCy with other NLP libraries.

Getting Started

pip install -U spacy

To install the English language model:

python -m spacy download en_core_web_sm

It may take a while, so you can grab a cup of coffee ☕️ or do some other work while the English model installs. When the installation succeeds, spaCy prints a confirmation message (“Linking successful” in older releases; the exact wording depends on the spaCy version).

Let’s go ahead and import spaCy.
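If you are unsure whether the installation worked, a quick check is possible from Python itself. The sketch below relies only on the standard library and on the fact that the English model is installed as a regular Python package named en_core_web_sm; the helper name is_installed is made up for this example.

```python
import importlib.util


def is_installed(package_name):
    """Return True if a top-level package can be found in this environment."""
    return importlib.util.find_spec(package_name) is not None


# Check both the library and the English model package.
for name in ("spacy", "en_core_web_sm"):
    status = "found" if is_installed(name) else "missing"
    print(f"{name}: {status}")
```

If either line reports "missing", rerun the matching install command above.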

#import spacy and load the english language model

import spacy

nlp = spacy.load("en_core_web_sm")

Note: the English language model must be loaded before spaCy can process English text.

Tokenization

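Tokenization is the process of breaking a text down into components or pieces. These pieces are called tokens. A tokenizer has to handle four things: prefixes (characters at the start of a word, such as ‘$’ or an opening bracket), suffixes (characters at the end, such as a full stop or a closing bracket), infixes (characters in the middle, such as a hyphen), and exceptions (special cases such as ‘isn’t’ or ‘U.K.’ that need their own rules). We don’t have to worry too much about these because spaCy has a built-in tokenizer that does the job for us.

Let’s get our hands dirty with some code.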

Code:

"""A Doc is a sequence of tokens. A Doc can be built from an article, a newspaper story, or a tweet.

The 'u' prefix marks a unicode string. It is optional in Python 3, where every str is already unicode."""
doc = nlp(u"NLP isn't the same as Neuro-linguistic programming.")
for token in doc:
    print(f"{token.text}")

Output:

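As we can see, spaCy is smart enough to distinguish between prefixes, infixes, and suffixes. For example, it splits “isn’t” into “is” and “n’t” and separates the final full stop from “programming”.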
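To make the terms prefix, suffix, infix, and exception concrete, here is a toy tokenizer in plain Python. It is only a sketch of the idea and not how spaCy is actually implemented; the rule tables (EXCEPTIONS, PREFIXES, SUFFIXES, INFIX) and the function name toy_tokenize are invented for this illustration and cover just a handful of cases.

```python
import re

# Hypothetical, deliberately tiny rule tables (not spaCy's real rules).
EXCEPTIONS = {"isn't": ["is", "n't"], "U.K.": ["U.K."]}
PREFIXES = ("(", '"', "$")
SUFFIXES = (")", '"', ".", ",", "!", "?")
INFIX = re.compile(r"(?<=[A-Za-z])(-)(?=[A-Za-z])")  # hyphen between letters


def toy_tokenize(text):
    """Split on whitespace, then peel prefixes and suffixes and split infixes."""
    tokens = []
    for chunk in text.split():
        prefixes, suffixes = [], []
        # Peel one character at a time until the chunk is a known exception
        # or no prefix/suffix is left.
        while chunk and chunk not in EXCEPTIONS:
            if chunk.startswith(PREFIXES):
                prefixes.append(chunk[0])
                chunk = chunk[1:]
            elif chunk.endswith(SUFFIXES):
                suffixes.insert(0, chunk[-1])
                chunk = chunk[:-1]
            else:
                break
        tokens.extend(prefixes)
        if chunk in EXCEPTIONS:
            tokens.extend(EXCEPTIONS[chunk])
        elif chunk:
            # The capturing group keeps the hyphen as its own token.
            tokens.extend(part for part in INFIX.split(chunk) if part)
        tokens.extend(suffixes)
    return tokens


print(toy_tokenize("NLP isn't the same as Neuro-linguistic programming."))
print(toy_tokenize("($1 U.K.)"))
```

Checking for exceptions before stripping suffixes is what keeps “U.K.” in one piece, while the full stop after “programming” is split off.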

Stemming

Stemming is the process of reducing words to their root form (stem), so that a group of related words maps to the same stem even if the stem itself is not a valid word in the language.

The most common algorithm for stemming English is Porter’s algorithm, which was developed in 1980.

Although spaCy doesn’t include a stemmer, it uses a better approach called lemmatization to reduce words to their root forms.
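To see why a stem is not always a real word, consider the toy suffix stripper below. It is not Porter’s algorithm, only a much cruder stand-in written for this article; the suffix list and the function name toy_stem are assumptions chosen for illustration.

```python
# Hypothetical suffix list, ordered longest first so that "ies" wins over "s".
SUFFIXES = ("ing", "ies", "ed", "es", "ly", "s")


def toy_stem(word, min_stem_length=3):
    """Chop the first matching suffix, keeping at least min_stem_length letters."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem_length:
            return word[: -len(suffix)]
    return word


for word in ("connected", "connecting", "connects", "running", "ponies", "is"):
    print(f"{word:12} {toy_stem(word)}")
```

The three forms of “connect” share one stem, but “running” becomes “runn” and “ponies” becomes “pon”, neither of which is an English word. A stemmer only looks at the letters, never at the context.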

Lemmatization

Lemmatization is the method of reducing a word to its root or base form (lemma). Unlike stemming, which follows an algorithm to chop off word endings, lemmatization goes a step further and looks at the surrounding text to determine the word’s part of speech.

The lemma of running is run, while the lemma of eating is eat. Again, spaCy does this under the hood, so you don’t need to worry.

Note the difference between “lemma” and “lemma_” in the figure above: “lemma_” returns the lemma as text, while “lemma” returns the hash value of the lemma.
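The link between a hash value and its text can be seen directly in spaCy’s string store, which is what attributes with and without the trailing underscore draw on. The sketch below uses a blank English pipeline (spacy.blank("en")) so that no model download is needed; the variable name run_hash is just for illustration.

```python
import spacy

# A blank pipeline has a vocabulary and string store but no trained components.
nlp = spacy.blank("en")

# Register a string and get back its integer hash.
run_hash = nlp.vocab.strings.add("run")

# The store can be queried in both directions.
print(run_hash)                              # an integer hash
print(nlp.vocab.strings[run_hash])           # run
print(nlp.vocab.strings["run"] == run_hash)  # True
```

In the same way, token.lemma holds the integer and token.lemma_ holds the readable text.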

If you’re confused 😵 and want to know more about the differences between lemmatization and stemming, click here.

Part-of-Speech tagging

Part-of-speech tagging, or POS tagging, is the marking of a word in a text or document with a particular part of speech (noun, verb, adjective) based on its definition and context. For more on POS tagging, click here.

spaCy can parse and tag a given text or document. spaCy uses a statistical model, which enables it to make predictions of which tag or label most likely applies in this context.

Code:

import spacy
nlp = spacy.load("en_core_web_sm")

doc2 = nlp("Apple is looking at buying U.K. startup for $1 billion")
for token in doc2:
    # Note the trailing underscore: pos_ and tag_ return text, pos and tag return hash values
    print(f"{token.text:10} {token.pos_:10} {token.tag_:10} {spacy.explain(token.pos_):15} {spacy.explain(token.tag_)}")

Output:

- Text: The original word text.

- POS: The simple part-of-speech tag.

- Tag: The detailed part-of-speech tag.
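The spacy.explain function used above can also be called on its own to decode a tag, and it does not need a loaded model. One detail worth knowing is that it returns None for a label it does not recognise, which cannot be padded inside an f-string. The small wrapper below is a sketch that guards against that; the name describe and the placeholder text are assumptions for this example.

```python
import spacy


def describe(label):
    """Return a readable description for a POS or tag label."""
    # spacy.explain returns None for labels missing from its glossary,
    # so fall back to a placeholder that is safe to format.
    return spacy.explain(label) or "(no description)"


for label in ("PROPN", "NNP", "VBZ", "NOT_A_TAG"):
    print(f"{label:10} {describe(label)}")
```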

Stop words

Stop words are commonly used words such as ‘I’, ‘you’, and ‘anyone’. They appear so often in a document that they carry little information of their own, so they don’t need to be tagged as thoroughly as nouns, verbs, and modifiers.

spaCy has a built-in list of over 300 English stop words that can be used to filter out unnecessary words in a document. spaCy also allows a user to add custom stop words that are not in its built-in list.

Code:

# To get the list of spaCy's built-in stop words
print(nlp.Defaults.stop_words)

Output:

Note: take care when removing stop words from a document, as shown in the figure above. If a sentence consists entirely of stop words, removing them leaves nothing of its meaning.
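Here is a minimal sketch of adding a custom stop word and then filtering a sentence. It uses a blank English pipeline (spacy.blank("en")), which carries the stop-word list but needs no model download; the custom word “btw” and the example sentences are made up for illustration.

```python
import spacy

nlp = spacy.blank("en")  # tokenizer and stop-word list only, no trained model

# Register a custom stop word (hypothetical choice: "btw").
nlp.Defaults.stop_words.add("btw")
nlp.vocab["btw"].is_stop = True

doc = nlp("btw this is a tutorial")
kept = [token.text for token in doc if not token.is_stop]
print(kept)

# A sentence built mostly from stop words may have little or nothing left.
quote = nlp("to be or not to be")
print([token.text for token in quote if not token.is_stop])
```

Comparing the two printed lists shows why blanket stop-word removal should be applied with care.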

Visualizing Parts-of-speech and Named Entities

spaCy comes with a built-in dependency visualizer called displacy, which can be used to visualize the syntactic dependency (relationships) between tokens and the entities contained in a text.

displacy output can be viewed either in a Jupyter notebook or in a web browser.

Code:

from spacy import displacy

# The text must be processed by nlp first; displacy renders a Doc, not a plain string
doc4 = nlp("The Bus arrives by noon")
displacy.render(doc4, style='dep')

Output:

We can play around by changing the style argument from ‘dep’ to ‘ent’ to visualize named entities with displacy.

Code:

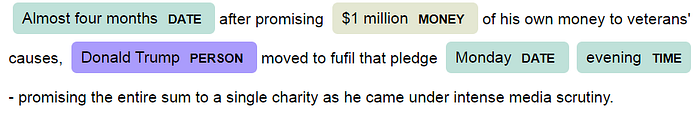

doc5 = nlp("Almost four months after promising $1 million of his own money to veterans' causes, Donald Trump moved to fulfil that pledge Monday evening - promising the entire sum to a single charity as he came under intense media scrutiny.")
displacy.render(doc5, style='ent')

Output:
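Outside a notebook, the same visualization can be produced as an HTML string and saved to a file that opens in any browser. The sketch below uses displacy’s manual mode with hand-written entities, so it runs without a loaded model; the entity labels, the helper name span, and the file name entities.html are assumptions for this example and not predictions from spaCy.

```python
from pathlib import Path

from spacy import displacy

text = "Donald Trump moved to fulfil that pledge Monday evening"


def span(phrase, label):
    """Build a displacy entity dict from a phrase's character offsets."""
    start = text.find(phrase)
    return {"start": start, "end": start + len(phrase), "label": label}


# Hand-written entities (assumed labels, not model output), sorted by position.
manual_doc = {
    "text": text,
    "ents": [span("Donald Trump", "PERSON"), span("Monday evening", "TIME")],
    "title": None,
}

# jupyter=False returns the markup as a string; page=True wraps it in a full page.
html = displacy.render(manual_doc, style="ent", manual=True, page=True, jupyter=False)
Path("entities.html").write_text(html, encoding="utf-8")
```

With a real Doc such as doc5, the manual=True argument and the hand-built dictionary are dropped and the Doc is passed in directly.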

Conclusion

We have come to the end of the first part of NLP basics with the spaCy library. In the next part, we will go over Named Entity Recognition (NER) and sentence segmentation.

I hope you found this tutorial interesting. Please share and remember to comment with your suggestions or feedback.

Don’t forget to follow me for posts on Data Science and AI.

Cheers!!!
